Mobile Robot Localization in Indoor Environments Using a 3D Camera

Place recognition is one of the fundamental problems in mobile robotics and has recently drawn much attention. From a practical point of view, efficient and reliable place recognition solutions covering a wide range of environment types would open the door to numerous applications of intelligent mobile machines in industry, traffic, public services, households, etc. From a purely scientific point of view, our human curiosity urges us to find out how close an artificial agent can come to our own ability to recognize places, a task that we perform with ease in everyday life.

The goal of this project is to develop a practically applicable robot vision system for the localization of mobile robots in indoor environments. The localization system we are developing is designed to process point clouds obtained by a 3D sensor, which can be either an RGB-D camera, such as the Microsoft Kinect, a PrimeSense sensor or the ASUS Xtion PRO LIVE, or a LiDAR.
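RGB-D cameras such as those listed above deliver a depth image rather than a ready-made point cloud, so a natural first step is to back-project every pixel through a pinhole camera model. The sketch below is a minimal illustration under stated assumptions: depth values are stored in millimetres, 0 marks a missing reading, and the intrinsics `fx`, `fy`, `cx`, `cy` are passed in by the caller. The function name `depth_to_points` is hypothetical and not part of the described system.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(depth: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float,
                    depth_scale: float = 0.001) -> List[Point3D]:
    """Back-project a depth image into a point cloud in the camera frame.

    depth[v][u] holds the raw depth of pixel (u, v); values are assumed to be
    millimetres (converted to metres by depth_scale), and 0 means "no reading".
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:  # skip pixels without a valid depth measurement
                continue
            z = d * depth_scale
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

For a 640×480 Kinect-class depth stream, values such as `fx=fy=525.0`, `cx=319.5`, `cy=239.5` are often quoted as approximate defaults; calibrated intrinsics of the actual sensor should be preferred.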

The localization system builds an environment map consisting of local models, each of which is a set of geometric features expressed in the reference frame of that local model. The robot is localized by registering the features acquired by a 3D sensor at a particular location in the environment with the local models. The geometric features used by the system are primarily planar surface segments; when an RGB-D camera is used, straight edges of objects and surfaces serve as additional features. The localization system is based on a pipeline consisting of three main stages: feature detection, hypothesis generation and hypothesis evaluation.
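Because every local model stores its features in its own reference frame, registering scene features with a local model amounts to expressing them in that frame under a candidate pose. A minimal sketch, assuming each planar segment is reduced to an infinite plane n·p = d with unit normal n, and that a hypothesis is a rotation R and translation t mapping camera-frame points to model-frame points (p' = R p + t). Substituting p = Rᵀ(p' − t) into n·p = d gives n' = R n and d' = d + n'·t. The names `Plane`, `transform_plane` and `planes_match`, and any tolerance values, are illustrative assumptions; a full system would also have to account for segment extent and measurement uncertainty, which this sketch ignores.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


@dataclass
class Plane:
    """Planar segment reduced to an infinite plane n . p = d (n is a unit normal)."""
    n: Vec3
    d: float


def mat_vec(R: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def rot_z(theta: float) -> Mat3:
    """Rotation matrix about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform_plane(plane: Plane, R: Mat3, t: Vec3) -> Plane:
    """Express a camera-frame plane in a model frame where points map as p' = R p + t."""
    n_new = mat_vec(R, plane.n)                    # normals only rotate
    return Plane(n_new, plane.d + dot(n_new, t))   # offset shifts by n' . t


def planes_match(a: Plane, b: Plane, max_angle: float, max_offset: float) -> bool:
    """Hypothetical compatibility test: similar normal direction and similar offset."""
    cos_angle = max(-1.0, min(1.0, dot(a.n, b.n)))
    return math.acos(cos_angle) <= max_angle and abs(a.d - b.d) <= max_offset
```

For instance, `transform_plane(Plane((1.0, 0.0, 0.0), 2.0), rot_z(math.pi / 2), (0.0, 1.0, 0.0))` takes a wall at x = 2 m in the camera frame, seen under a 90° rotation about z and a 1 m shift along y, to the plane y = 3 m in the model frame.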

A depth image of the scene, acquired by a 3D camera from a particular location in the environment, is segmented into sets of 3D points representing approximately planar surface segments, using a split-and-merge algorithm similar to that of Schmitt and Chen (1991) followed by hierarchical region merging (Garland et al., 2001).

Next, the system searches for subsets of local model features whose geometric arrangement is similar to that of subsets of scene features. This is the hypothesis generation stage: the transformation that aligns a subset of local model features with a subset of scene features represents a hypothesis that the camera is located at the place corresponding to this local model, in a particular pose. Hypotheses are generated by constructing an interpretation tree (Grimson, 1990) whose nodes are pairs (scene feature, local model feature); each path from a leaf to the root represents a camera pose hypothesis. High efficiency is achieved by ranking the feature pairs according to their information content and adding them to the tree in order of rank, starting from the highest-ranked pair.

Hypothesis generation usually produces a rather large set of hypotheses, from which the correct one must be selected. In the hypothesis evaluation stage, the probability of each hypothesis is estimated, and the hypothesis with the highest probability is selected as the final solution. This evaluation is based on computing the conditional probability that a particular hypothesis is correct given that a set of features in a particular geometric arrangement is detected in the currently observed scene.
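The interpretation-tree search can be illustrated with a small depth-first sketch. It rests on simplifying assumptions: features are planar segments reduced to unit normals, two pairs are considered consistent only if the angle between the scene normals equals the angle between the corresponding model normals within a tolerance, and the ranking score, which stands in for the information content used by the actual method, is supplied by the caller. The function `generate_hypotheses`, its parameters and the 5° tolerance are hypothetical. Each accepted path of length `depth` is a set of correspondences from which a pose could then be estimated; the pose computation itself is omitted.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Pair = Tuple[int, int]  # (scene feature index, model feature index)


def angle(a: Vec3, b: Vec3) -> float:
    """Angle between two unit vectors."""
    c = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, c)))


def generate_hypotheses(scene: List[Vec3], model: List[Vec3],
                        pair_rank: Dict[Pair, float], depth: int = 3,
                        tol: float = math.radians(5.0),
                        max_hypotheses: int = 10) -> List[List[Pair]]:
    """Depth-first interpretation-tree search over ranked feature pairs."""
    # Expand higher-ranked pairs first (placeholder for "information content").
    pairs = sorted(pair_rank, key=pair_rank.get, reverse=True)
    hypotheses: List[List[Pair]] = []

    def consistent(path: List[Pair], pair: Pair) -> bool:
        i, j = pair
        for pi, pj in path:
            if pi == i or pj == j:  # each feature may be used once per path
                return False
            # Pairwise geometry must agree between scene and model.
            if abs(angle(scene[i], scene[pi]) - angle(model[j], model[pj])) > tol:
                return False
        return True

    def expand(path: List[Pair], start: int) -> None:
        if len(hypotheses) >= max_hypotheses:
            return
        if len(path) == depth:  # a complete leaf-to-root path is one hypothesis
            hypotheses.append(list(path))
            return
        for k in range(start, len(pairs)):
            if consistent(path, pairs[k]):
                path.append(pairs[k])
                expand(path, k + 1)  # later pairs only: each set is visited once
                path.pop()

    expand([], 0)
    return hypotheses


if __name__ == "__main__":
    scene = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.6, 0.0, 0.8)]
    model = [(0.6, 0.0, 0.8), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # same normals, shuffled
    rank = {(i, j): 1.0 for i in range(3) for j in range(3)}  # uniform placeholder scores
    for h in generate_hypotheses(scene, model, rank):
        print(sorted(h))
```

On this toy input the search returns two correspondence sets: the correct one and a mirror-image assignment that preserves every pairwise angle. Angle checks alone cannot tell them apart, so later steps such as pose estimation and hypothesis evaluation must resolve this kind of ambiguity. Raising the rank of the correct pairs makes the search reach them first, which is the point of the ranking.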

The methodology used in the presented localization system is described in detail in the paper:

R. Cupec, E. K. Nyarko, D. Filko, A. Kitanov, I. Petrovic, Place Recognition Based on Matching of Planar Surfaces and Line Segments. The International Journal of Robotics Research, Vol. 34, No. 4–5, pp. 674–704, 2015.

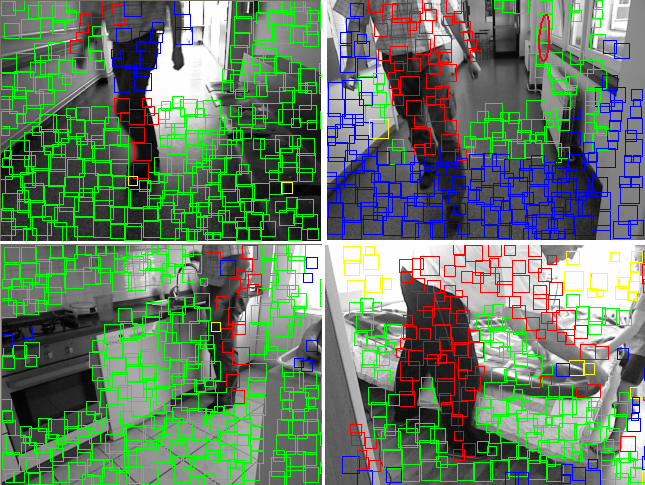

A few examples of correct hypotheses are shown in the accompanying figure, where green squares represent scene surfaces matched with local model surfaces, red squares represent scene surfaces occluding model surfaces (objects in the scene that are not present in the local model), and blue squares represent scene surfaces lying outside the volume covered by the local model.
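The colour coding described above can be mimicked with a simple rule set applied to an accepted hypothesis. This is a simplified sketch under stated assumptions, not the method from the paper: each surface is reduced to a centroid and a unit normal, the volume covered by a local model is approximated by an axis-aligned box, a scene surface counts as matched if some model surface has a similar normal and its plane passes close to the scene centroid, and any remaining surface inside the box is labelled occluding without an explicit visibility test. The function `classify_surfaces` and its tolerances are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]
Surface = Tuple[Vec3, Vec3]  # (centroid, unit normal)


def mat_vec(R: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def classify_surfaces(scene: List[Surface], model: List[Surface], R: Mat3, t: Vec3,
                      box_min: Vec3, box_max: Vec3,
                      max_angle: float = math.radians(10.0),
                      max_dist: float = 0.05) -> List[str]:
    """Label scene surfaces as 'matched' (green), 'occluding' (red) or 'outside' (blue)."""
    labels: List[str] = []
    for c, n in scene:
        c_m = tuple(x + y for x, y in zip(mat_vec(R, c), t))  # centroid in model frame
        n_m = mat_vec(R, n)                                     # normals only rotate
        if any(p < lo or p > hi for p, lo, hi in zip(c_m, box_min, box_max)):
            labels.append("outside")
            continue
        matched = False
        for mc, mn in model:
            cos_a = max(-1.0, min(1.0, dot(n_m, mn)))
            # Similar orientation and scene centroid close to the model plane.
            if math.acos(cos_a) <= max_angle and abs(dot(mn, c_m) - dot(mn, mc)) <= max_dist:
                matched = True
                break
        # Simplification: unmatched surfaces inside the volume are treated as occluders.
        labels.append("matched" if matched else "occluding")
    return labels


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    model = [((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)),    # floor
             ((2.0, 0.0, 1.0), (-1.0, 0.0, 0.0))]   # wall at x = 2
    scene = [((0.5, 0.5, 0.02), (0.0, 0.0, 1.0)),   # floor patch
             ((1.0, 0.0, 0.7), (-1.0, 0.0, 0.0)),   # box face in front of the wall
             ((5.0, 0.0, 1.0), (-1.0, 0.0, 0.0))]   # surface beyond the model volume
    print(classify_surfaces(scene, model, identity, (0.0, 0.0, 0.0),
                            (-1.0, -3.0, -0.1), (3.0, 3.0, 3.0)))
```

Confirming that an unmatched surface really hides a model surface would require a visibility test along the camera rays, and the centroid-only rule ignores surface extent; both are deliberate simplifications to keep the example short.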

The use of color and texture descriptors to improve the performance of this place recognition system is investigated in the paper:

D. Filko, R. Cupec, E. K. Nyarko, Evaluation of Color and Texture Descriptors for Matching of Planar Surfaces in Global Localization Scheme. Robotics and Autonomous Systems, Vol. 80, pp. 55–68, 2016.

We provide a benchmark dataset for the evaluation of mobile robot localization approaches based on planar surface segments.